Introduction

In this final blog post, published two days before the election (Sunday Nov 3), I am going to explain how I prepared my final model and the results derived from it.

Before I do so, however, I want to share what is perhaps my main takeaway from this class thus far:

Predicting elections is hard.

In many ways, it is a somewhat impossible task. Forecasting elections is not difficult because procuring data is challenging or because training models is computationally expensive; rather, predicting the outcomes of US elections is fundamentally hard because there are relatively few elections from which we can construct our models.

Since (and including) the United States’ first election in 1789, there have been a grand total of 59 presidential elections (270 To Win). This means we only have 59 outcomes from which we can identify patterns and create a model to predict the two-party vote share at the national level. Once we consider that polling data only goes as far back as 1968, we are left with only 14 elections from which to base our models. While it could be tempting to believe that we actually have closer to 14 times 50 elections, or 700 elections, from which to make our models (if we used the election results from each state), this isn’t quite the case. Polling can be expensive, so generally, state-level polling studies are conducted in more competitive states, and we may not have sufficient polling data to produce models for all of our states in every election cycle. For this reason, we have relatively few outcomes, especially in recent years, from which we can produce models. This is an issue because, with limited testing data, we run the risk of overfitting, creating models that generalize poorly.

Though outcome data is a significant limitation, the same cannot be said of covariate data. Through lab sections, we have explored voter file data sets, turnout data sets, demographic data sets, macroeconomic data sets, polling data sets, campaign spending data sets, campaign event data sets, and other data sets on political fundamentals (incumbent candidate, June approval rate, etc.). There is an incredible volume of predictors that we could theoretically include in our model (national unemployment rate, mean polling averages, lagged vote share, etc.) We could even imagine breaking down these predictors: should we include the latest week’s polling average by state, or the latest month’s, or the average in the last year? Over the past several weeks, we have also explored a variety of regression tools including OLS, Bayesian priors, logistic regression, LASSO and Ridge, and other machine learning models.

One approach to handling this overabundance of covariate data and the various regression modelling techniques at our disposal is to use all of the outcome and predictor data that we possess, and then use cross-validation to determine which permutation of covariate features and machine learning models yields the lowest RMSE for predicting these outcomes. While this is tempting, it is also likely to produce a very complicated model that is highly sensitive to decisions in the validation process:

Do we equally weight the model’s ability to predict the winner of each state in each election year? Or do we weigh more recent elections more heavily? States with more electoral votes? More competitive states? States with more polls? How many polls must a state have for us to include it as an observation in our testing data set? Are all polls equally valid, or should we weigh them by their credibility?

Do we believe that Americans vote differently now from how they would have in decades past? Are voters less likely to vote for a candidate of a different party now than in the past? Do voters consider either candidate incumbent in 2024? Are voters more responsive to unemployment and inflation in some years compared to others?

For something that, at face value, might seem like a scientific prediction problem, the process of constructing a 2024 election forecast is, in my opinion, in many ways, a subjective art. We are making assumptions about voter behavior that could actually vary across years.

Given how much space there is for bias, I want to keep this model as simple as possible. This choice is a bold one, but I want to test how well a model with few covariates compares to other classmates’ more complex models models.

The code used to produce these visualizations is publicly available in my github repository and draws heavily from the section notes and sample code provided by the Gov 1347 Head Teaching Fellow, Matthew Dardet.

My Model

Both Sabato’s Crystal Ball of the Center for Politics and the Cook Political Report list the same seven states as “toss-ups.” Almost universally across news platforms and forecasting models, the following seven states are identified as the key swing states which will, most likely, determine the election:

- Arizona

- Georgia

- Michigan

- Nevada

- North Carolina

- Pennsylvania

- Wisconsin

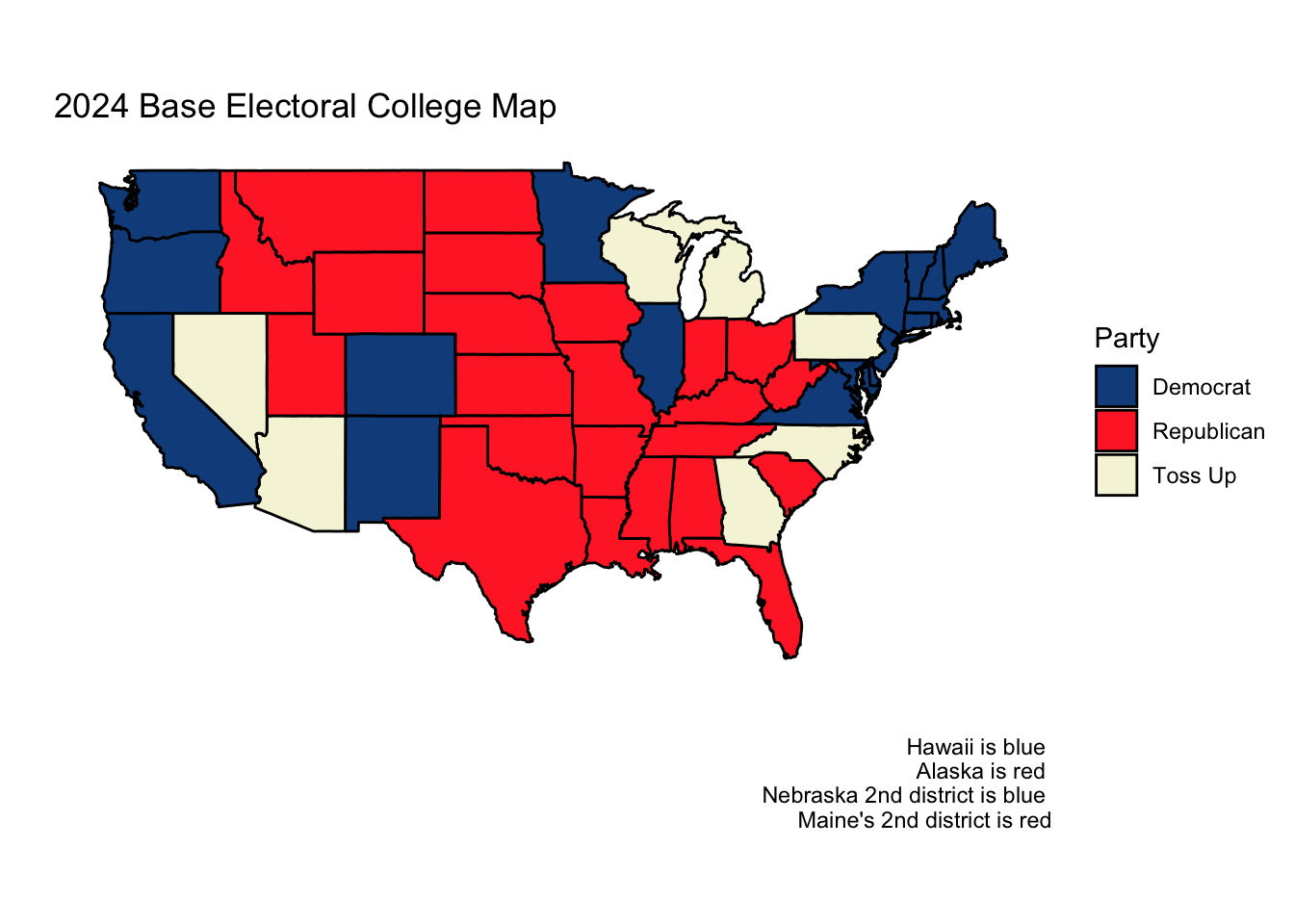

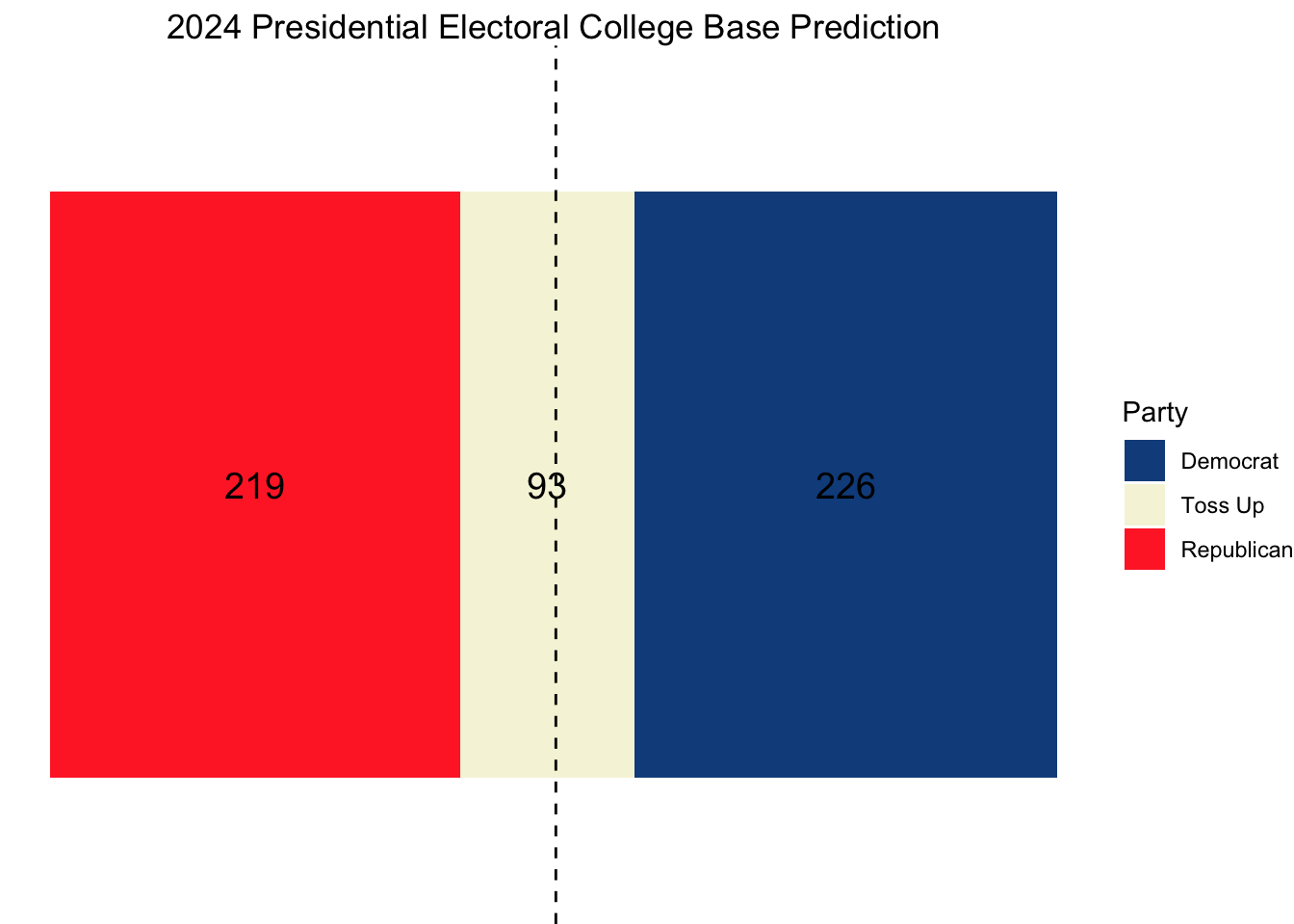

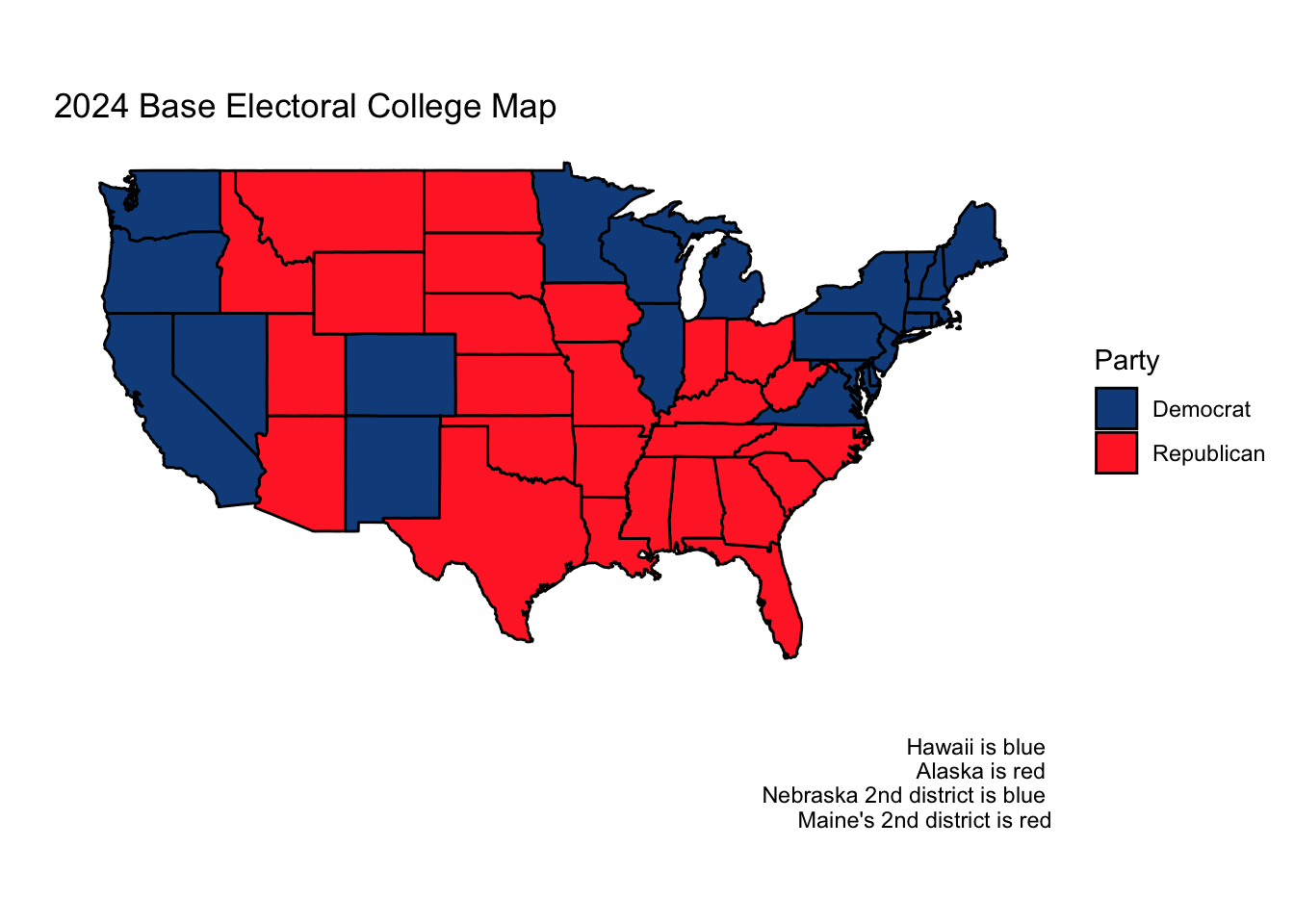

Interestingly, both of these preeminent forecasting websites, as of November 3rd, do not rank a single state as either lean Republican or lean Democrat. There are only “solid"s, “likely"s, and “toss-up"s. Thus, for the purposes of both this week’s final prediction, I will forecast the two-party vote share in each of these battleground states and assume other states and districts vote as they did in 2020.

This gives us the following initial electoral college map:

Preparing My Electoral College Model

Using national-level polling average data since 1968 from FiveThirtyEight, I will construct a model that uses the following polling features to predict the national two-party vote share.

- Polling average for the Republican candidate within a state in each of the ten most recent weeks

- Polling average for the Democratic candidate within a state in each of the ten most recent weeks

I am opting to only consider the polling averages within the last ten weeks of the campaign rather than the entirety of the campaign as I believe these to be most predictive of the ultimate election outcome. I am concerned that introducing averages from earlier periods would lead to overfitting, and, considering the unique candidate swap of 2024, I do not believe Biden nor Harris’s polling averages from the stage in the election before Harris could establish a proper campaign strategy are informative. These averages also occur after both parties have held their respective conventions and show the leanings of a more settled electorate. I will also be rescaling all polling averages so that the two-party vote share sums to 100. After doing this, I will only use Democratic polls to create a model (which is possible because Republican two-party vote share is just 100 - Democratic two-party vote share).

While there are a number of fundamental and economic covariates I considered exploring (whether a candidate is only a party incumbent, GDP growth in the second quarter of the election year, RDPI growth in the second quarter of the election year, unemployment rate in the second quarter of the election year, June approval rating, etc.), I found that my forecasts were highly variable depending on which fundamental variables I decided to include. It is my belief that many of the trends explained by fundamental variables (incumbency bias, high growth is good for candidates, high inflation is bad for candidates, etc.) is already baked into the polls, so I will focus on constructing a polls-only model.

We will train a two-party vote share model for to predice Democratic vote share, and then apply this model to our 2024 data to generate predictions.

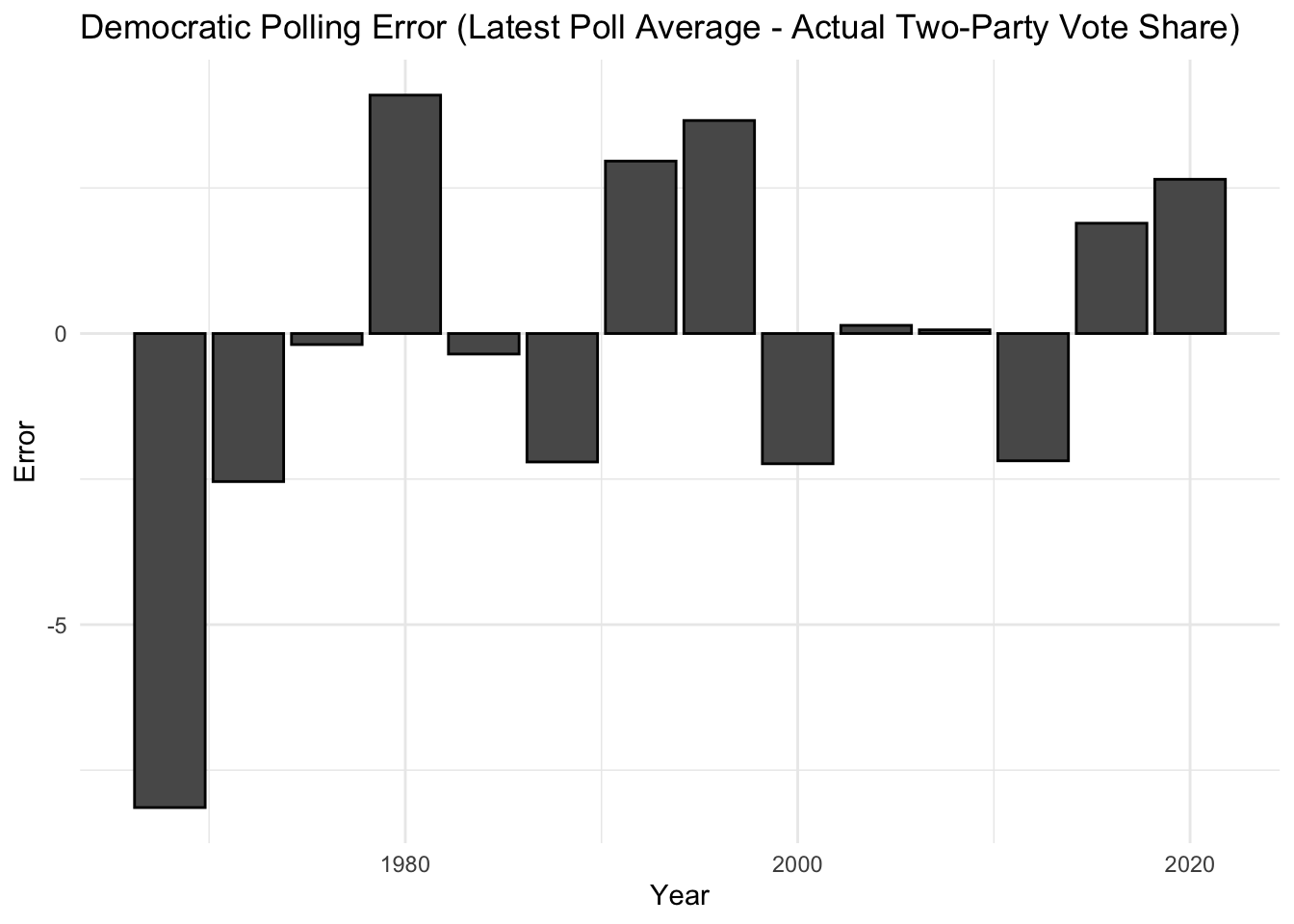

First, I want to assess whether modern national polls are systematically more accurate than older polls.

Because the year with the highest polling error was the first year in which polls were conducted in our data set, there is reason to think the science of polling was still developing. Since this outlier could skew the results of our regression and is plausibly not representative of current possibilities, I feel comfortable removing the year 1968 from our sample. Otherwise, the polling errors look reasonably uncorrelated with time.

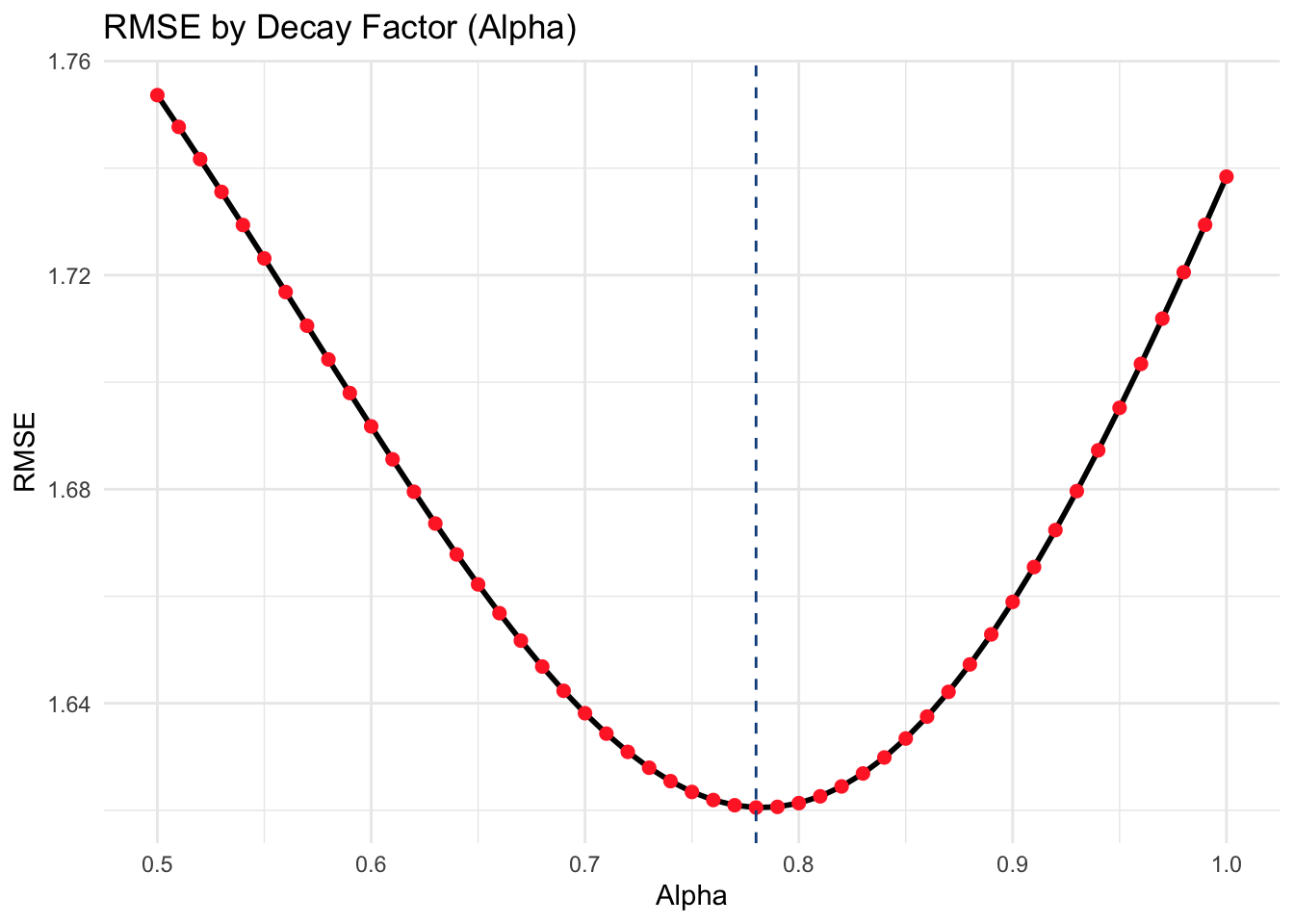

Now, I want to find the optimal way to average my week by week polling averages into an overall estimate for the national two-party Democratic vote share. Intuitively, I can assume that more recent polls are more accurate than less recent polls. I can model the weight of each column (week 1 through 10) with a decay factor, alpha, that compounds exponentially each week further away from week 1 that I get.

I will test all decay value within the set of {1, 0.99, 0.98,…, 0.51, 0.5}.

For each decay value, I will use leave-one-out cross validation to calculate the overall RMSE of a linear regression that estimates the two-party Democratic vote share using the weighted average vote share from each election cycle except the cycle that is currently being left out.

This process yields the following RMSE graph. As we can see, 0.78 is the optimal decay value with a root mean squared error of 1.62

This yields the following weights for each week before the election:

| Weeks Left Until Election | Weight |

|---|---|

| 1 | 1.0000000 |

| 2 | 0.7800000 |

| 3 | 0.6084000 |

| 4 | 0.4745520 |

| 5 | 0.3701506 |

| 6 | 0.2887174 |

| 7 | 0.2251996 |

| 8 | 0.1756557 |

| 9 | 0.1370114 |

| 10 | 0.1068689 |

For each fold, the out-of-sample squared error is shown below.

| Fold Left Out | In-Sample RMSE | Out-of-Sample SE |

|---|---|---|

| 1 | 1.455863 | 1.7409968 |

| 2 | 1.478439 | 0.0230401 |

| 3 | 1.169652 | 10.7116157 |

| 4 | 1.395906 | 3.7339550 |

| 5 | 1.479025 | 0.0000835 |

| 6 | 1.458749 | 0.8666411 |

| 7 | 1.461536 | 0.7915443 |

| 8 | 1.409345 | 2.6173176 |

| 9 | 1.471802 | 0.2778467 |

| 10 | 1.304142 | 6.4688561 |

| 11 | 1.310783 | 6.1094295 |

| 12 | 1.472901 | 0.2409560 |

| 13 | 1.465302 | 0.5564014 |

This model yields the following Harris-Trump national popular vote prediction.

| Year | Predicted Democratic Two-Party Vote Share | Predicted Republican Two-Party Vote Share |

|---|---|---|

| 2024 | 51.15365 | 48.84635 |

State-level estimates

I will now use this same decay factor to predict the two-party vote share for each of our battleground states. I chose to find this decay factor from national popular vote data because I have higher confidence in the consistent quality of national polls over time than the consistent quality of state polls over time, especially across states. It also seems like a reasonable assumption to me that this decay factor would remain largely constant over time.

For the same reasons as those I have already mentioned, I want to make this model as simple as possible and will only be considering the polling averages in each of the 10 weeks leading up to the election for each of our battleground states. I will use these 10 polling averages to construct a weighted average for each state. The formula for our weighted average (and our model) is as follows:

The weighted average for the Democratic two-party vote share for each state in 2024 is displayed below:

| State | Predicted Democratic Two-Party Vote Share |

|---|---|

| Arizona | 49.24514 |

| Georgia | 49.36078 |

| Michigan | 50.57455 |

| Nevada | 50.19946 |

| North Carolina | 49.56858 |

| Pennsylvania | 50.18314 |

| Wisconsin | 50.53039 |

Here, we can see that Arizona, Georgia, and North Carolina are favored to vote red while the other states are favored to vote blue.

For comparison, let’s see how our model would have fared for these same states in 2020.

| State | Predicted Democratic Two-Party Vote Share | Actual pv2p |

|---|---|---|

| Arizona | 51.96036 | 50.15683 |

| Georgia | 50.16966 | 50.11933 |

| Michigan | 54.11059 | 51.41356 |

| Nevada | 53.14477 | 51.22312 |

| North Carolina | 51.12033 | 49.31582 |

| Pennsylvania | 53.03826 | 50.58921 |

| Wisconsin | 53.73649 | 50.31906 |

All of our states would have been projected to vote blue, which was the case with the exception of North Carolina, which voted Republican. This is an RMSE of 2.24 percentage points.

Across the 442 state elections since 1968 in which we have polling averages for each of the ten weeks leading up to the election, our model correctly predicts the winner of the state 91.12% of the time with an RMSE of roughly 3.02 percentage points.

I will now use a simulation to get an estimate of how confident we are in these results. I will do this by sampling new state-level polling measurements for each of our 7 states 10,000 times, assuming a normal distribution around the current polling values with a standard deviation determined by the average distance of each state’s poll away from the actual outcome in the past two elections.

To create this standard deviation, I will take the average of the mean polling error of each state in 2016 and 2020 (weighted equally for both years) to capture the “Trump-era” polling error. This assumes that there is no systematic polling error in favor of either candidate, which is plausible given how the 2022 midterm favored Democrats. I will also use a stein adjusted error since I find it more realistic than simply averaging the polling error of a stat in the last two cycles.

| State | Average Error | Stein Adjusted Error |

|---|---|---|

| Arizona | 1.5458014 | 1.7387023 |

| Georgia | 0.4857592 | 0.9464523 |

| Michigan | 3.5667802 | 3.2491329 |

| Nevada | 1.1831851 | 1.4676916 |

| North Carolina | 2.3973182 | 2.3751053 |

| Pennsylvania | 3.1957976 | 2.9718696 |

| Wisconsin | 3.7910859 | 3.4167736 |

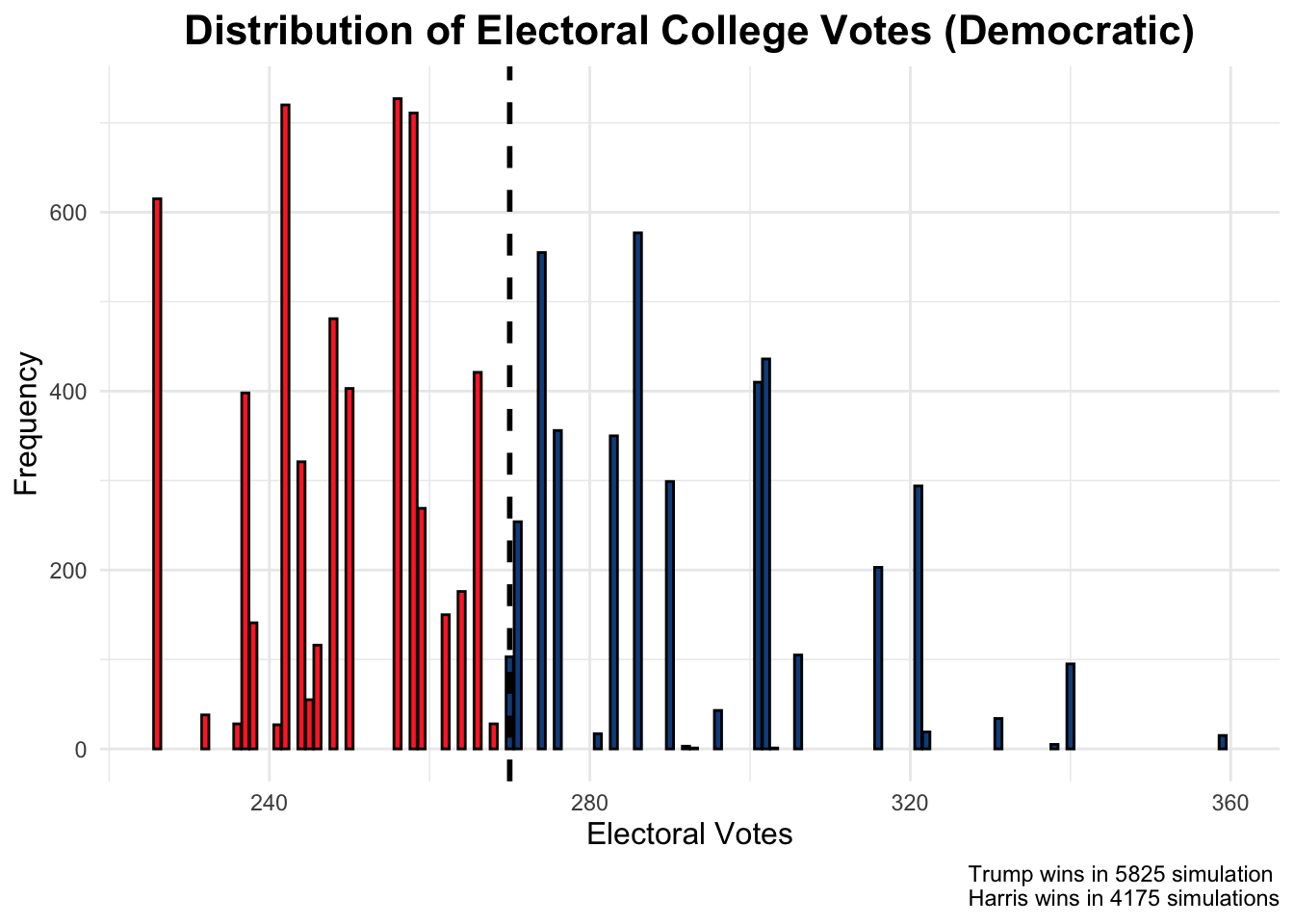

Using the above weighted errors as standard deviations yields the following simulation breakdown.

| State | D Win Percentage | 2.5th Percentile | 97.5th Percentile |

|---|---|---|---|

| Arizona | 26.84 | 45.90000 | 52.65498 |

| Georgia | 16.66 | 47.47985 | 51.19328 |

| Michigan | 60.81 | 44.21533 | 57.12646 |

| Nevada | 57.06 | 47.33077 | 53.03849 |

| North Carolina | 40.15 | 44.86851 | 54.21899 |

| Pennsylvania | 53.48 | 44.32085 | 55.93782 |

| Wisconsin | 59.62 | 43.84012 | 57.29537 |

We also get the following distribution of simulations.

This distribution is somewhat misleading, however, because it assumes that the outcomes of each of the seven battleground states are independent from one another (and that the polling errors across the states are not correlated with one another). For this reason, I will project the winner of the election based on which candidate is most likely to win each state. This distribution does show, however, that part of Harris’s weakness is that she is performing well in states with higher average polling errors.

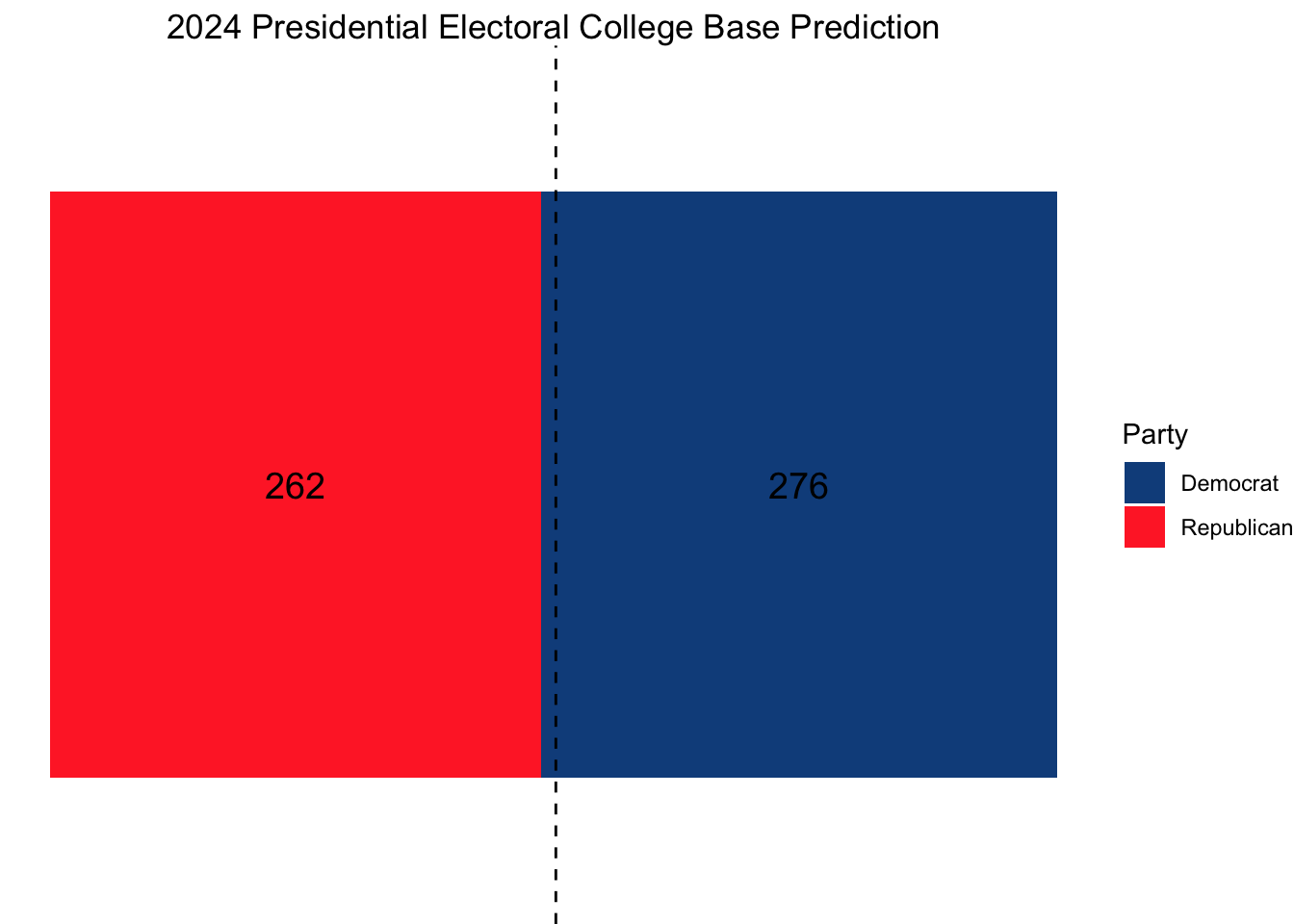

Projections

Using this model, our ultimate electoral college would look as follows, with Vice President Kamala Harris narrowly squeaking out a win.

Citations:

“Historical U.S. Presidential Elections 1789-2020 - 270towin.” 270toWin, www.270towin.com/historical-presidential-elections/. Accessed 3 Nov. 2024.

Data Sources:

Data are from the US presidential election popular vote results from 1948-2020 and state-level polling data for 1968 onwards.